The Evolution of Data Centers

In the past few years, communication technologies have evolved rapidly, and the data center is a well-known structure for managing all of this data flow. Its most obvious components are telecommunications and storage systems, but over time several others have become just as essential: redundant power systems, data communications connections, environmental controls and security devices.

In the early days, committing to build a data center was a long journey. The whole project could take as long as 25 years, and the resulting facilities suffered from inefficient power and cooling systems, no flexibility, low scalability, and cable management that was costly and difficult to understand.

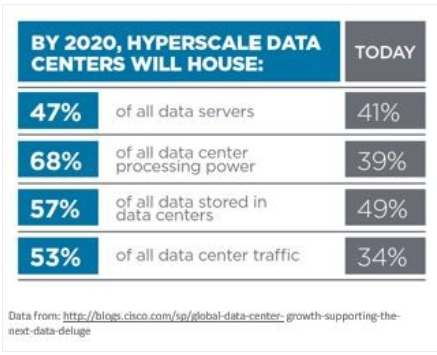

The concept of “hyperscale” entered the market and changed the data center paradigm. Its core idea is a new generation of data centers that can change, scale and grow quickly with minimal impact on both customers and the company.

The Cisco Global Cloud Index gives an idea of how data traffic has grown over the years and lets us estimate what it will look like in 2020.

The number of hyperscale data centers needed has been growing steadily, and this trend looks set to continue for some time.

The main question, however, is: what has changed to give data centers these new capabilities?

Server technology has become critical as performance requirements increase. While the market was still using 8 Gbps connections, companies were already looking for 16, 25 or even 32 Gbps. The arrival of FPGAs (field-programmable gate arrays), used for security and performance, led server I/O to evolve from 10G and 25G to 50G.

In storage, the large performance gain introduced by PCIe switching allows each server to host more drives, so fewer servers and switches are needed and the overall cost drops.

Switch chips have also improved, and hyperscale demands are driving 200G/400G uplinks. This is changing cable management and data center infrastructure, for example through the adoption of the spine-and-leaf architecture.
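To make the spine-and-leaf idea more concrete, here is a minimal sketch of a two-tier fabric. It is a hypothetical illustration, not taken from any vendor tool: the class name `LeafSpineFabric`, the port counts and the link speeds are all assumptions, and it assumes exactly one uplink from each leaf to each spine. Every leaf (top-of-rack) switch connects to every spine switch, so traffic between servers on different leaves always crosses three switches, and the oversubscription ratio (server-facing bandwidth divided by spine-facing bandwidth on one leaf) shows why faster uplinks matter.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class LeafSpineFabric:
    """Hypothetical two-tier leaf-spine fabric; all parameters are illustrative."""
    leaves: int                 # leaf (top-of-rack) switches
    spines: int                 # spine switches
    server_ports_per_leaf: int  # server-facing ports on each leaf
    server_port_gbps: int       # speed of each server link, e.g. 25
    uplink_gbps: int            # speed of each leaf-to-spine link, e.g. 100 or 400

    def links(self) -> list[tuple[str, str]]:
        # Every leaf connects to every spine; leaves never connect to each other,
        # and spines never connect to each other.
        return [(f"leaf{l}", f"spine{s}")
                for l in range(self.leaves)
                for s in range(self.spines)]

    def oversubscription(self) -> float:
        # Server-facing bandwidth divided by spine-facing bandwidth on one leaf,
        # assuming one uplink per spine.
        downlink = self.server_ports_per_leaf * self.server_port_gbps
        uplink = self.spines * self.uplink_gbps
        return downlink / uplink

    def switches_traversed(self, leaf_a: int, leaf_b: int) -> int:
        # Same leaf: traffic stays on one switch.
        # Different leaves: leaf -> any spine -> leaf, whatever the fabric size.
        return 1 if leaf_a == leaf_b else 3


fabric = LeafSpineFabric(leaves=8, spines=4, server_ports_per_leaf=48,
                         server_port_gbps=25, uplink_gbps=100)
print(len(fabric.links()))        # 32 leaf-to-spine links
print(fabric.oversubscription())  # 3.0, i.e. a 3:1 oversubscribed leaf

faster = replace(fabric, uplink_gbps=400)
print(faster.oversubscription())  # 0.75: uplinks now exceed server bandwidth
```

In this example, moving from 100G to 400G uplinks turns a 3:1 oversubscribed leaf into one with more uplink than server capacity. Scaling out means adding leaves for more server ports or spines for more bandwidth, and in either case the number of switches between two servers does not grow.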

Many cloud companies are also using specialized silicon architectures (such as GPUs) to increase computing speed.

If the market keeps evolving at this rate, all the technologies mentioned in this article will soon be outdated, and newer, more sophisticated ones will come into play. It also shows how far we still are from reaching the limit.

Alejandro Martí