GPUs in Datacenters

Hi everybody! In today’s post, we are going to discuss the use of GPUs in datacenters and how they can accelerate certain tasks.

Using GPUs to replace or assist CPUs in datacenters has been a long time coming, especially since it was discovered a few years ago that, beyond their traditional uses (games, image processing, 3D rendering), GPUs outperform CPUs on other workloads such as deep learning and big data.

Since then, businesses, be they GPU manufacturers, cloud service providers or others, have started offering solutions tailored to those who need them. Nvidia is one such example.

Still, the question remains: why would you use a GPU rather than a CPU in a datacenter? Why does it make some things faster (and others slower)?

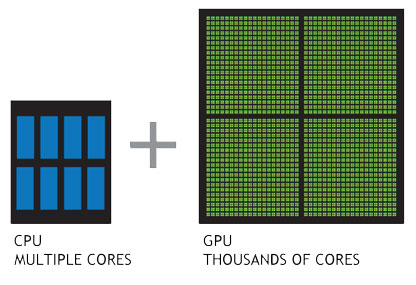

The answer is the number of cores. GPUs trade quality for quantity: they offer a much higher number of cores, each with less individual “processing power”, which makes them ideal for tasks that can be split into a very large number of parallel operations.

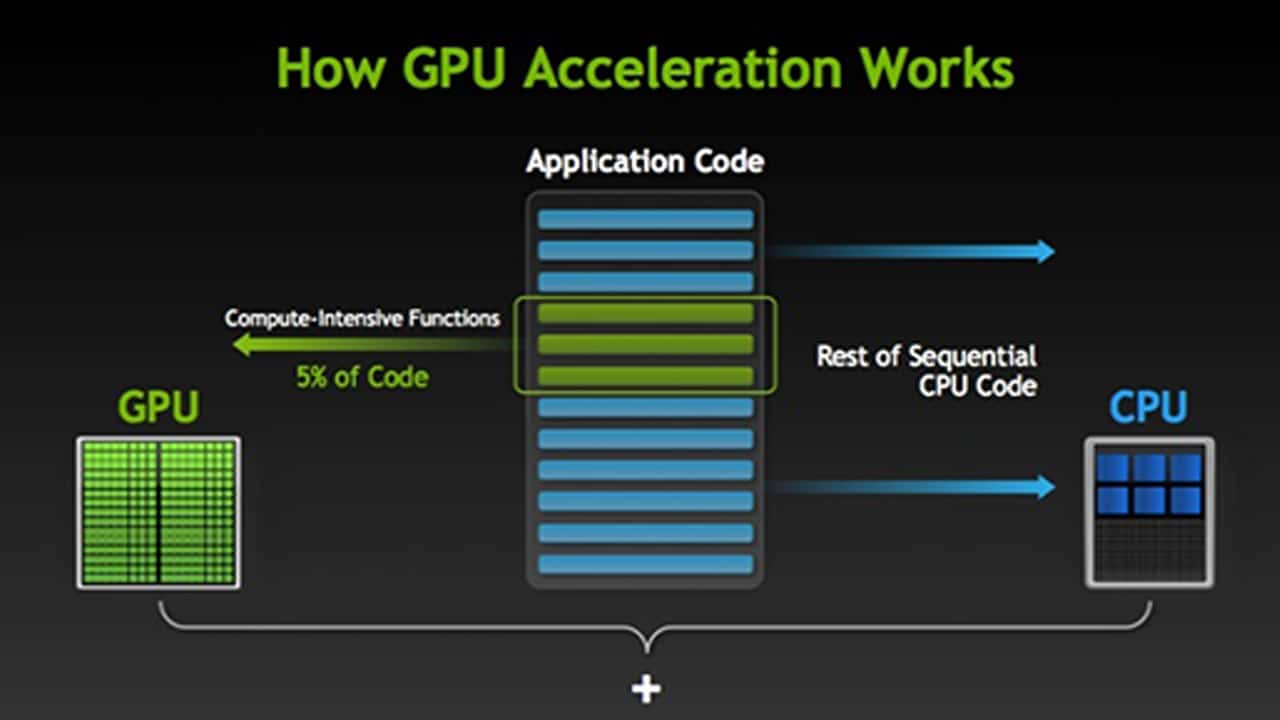

That being said, as the drawbacks show, this development does not mean that all CPUs must be replaced by GPUs: they are not a replacement, but an aid. This is what is called GPU acceleration, the use of GPUs in conjunction with CPUs. In this model, the most compute-intensive code is offloaded to the GPU for faster processing, while the rest keeps running on the CPU.
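To make this division of labor a bit more concrete, here is a minimal sketch in plain Python. It does not touch a real GPU: `saxpy_kernel` and `launch` are hypothetical names made up for this post, and the "launch" simply loops on the CPU. The point is only the shape of the work: the host code does the sequential setup, and the heavy part is written as a tiny function that handles one element, so that many cores could each take a different index at the same time.

```python
# Illustrative only: plain Python running on the CPU, no real GPU involved.
# saxpy_kernel and launch are hypothetical names, not part of any GPU library.


def saxpy_kernel(i, a, x, y, out):
    """Work done by one hypothetical GPU thread: a single element."""
    out[i] = a * x[i] + y[i]


def launch(kernel, n, *args):
    """Stand-in for a GPU launch: run the kernel once per index.

    On a real GPU these n invocations would be spread across many cores.
    Here they simply run one after another, which gives the same result
    because no invocation depends on another.
    """
    for i in range(n):
        kernel(i, *args)


def main():
    # Host (CPU) side: sequential setup and control flow.
    n = 8
    a = 2.0
    x = [float(i) for i in range(n)]
    y = [1.0] * n
    out = [0.0] * n

    # The compute-intensive, element-wise part is what would be offloaded.
    launch(saxpy_kernel, n, a, x, y, out)
    return out


if __name__ == "__main__":
    print(main())
```

Because each call to `saxpy_kernel` only reads and writes its own index, the calls could run in any order, or all at once. That independence is what lets a large number of simpler cores pay off.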

The key factors driving the growth of GPUs in this sector are:

- As the use of GPUs increases performance in some areas, datacenters do not need as many servers as before, resulting in lower costs.

- GPUs support virtual desktops, a common technology in today’s datacenters.

- More than 550 applications have been made compatible with GPUs from Nvidia, the leading manufacturer in this sector.

- Some sequential tasks can also be run on GPU-accelerated devices.

As for the areas where GPUs increase performance, there are many: databases, machine learning, deep learning, data science, crypto mining, and cryptography are only part of a bigger whole. Moreover, as the amount of data on the Internet grows, optimization has become a priority, greatly increasing the production of GPU-based technology.

However, we must remember that not every application can benefit from GPU acceleration: some are highly sequential, and those that can benefit must, without exception, be adapted first.
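One common way to reason about this limit is Amdahl's law, which this post brings in as an illustration (it is not specific to GPUs): if only a fraction p of a program can run in parallel on n workers, the best possible speedup is 1 / ((1 - p) + p / n). The sketch below is a rough estimate under that assumption. The function name and the worker counts are made up for the example, and it ignores real-world costs such as moving data between CPU and GPU memory.

```python
def amdahl_speedup(parallel_fraction, workers):
    """Upper bound on speedup according to Amdahl's law.

    parallel_fraction: share of the run time that can be parallelized (0..1).
    workers: number of parallel workers (hypothetical core count, >= 1).
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


if __name__ == "__main__":
    # Compare a half-sequential program with a highly parallel one,
    # both on the same hypothetical 1000 workers.
    for p in (0.5, 0.99):
        print(f"p={p}: at most {amdahl_speedup(p, 1000):.2f}x faster")
```

With these made-up numbers, a program that is half sequential cannot even double its speed, no matter how many cores are added, while one that is 99% parallel gains far more. This is why highly sequential applications are poor candidates for GPU acceleration.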

In any case, the usefulness of this technology is shown by the fact that big companies like Google, Amazon, Microsoft and Oracle, among others, use it in their datacenters. Nike, for example, uses MapD's database software to analyze historical sales data and predict demand.

As Nvidia’s director of datacenter GPU computing technology Roy Kim said, "Whether it be GPUs or FPGAs, it's clear that accelerators are the way forward".