Quantum Computing in Datacenters

After decades of advances in fields such as mathematics and physics, quantum computing has become a reality, and it is starting to appear (or at least aims to appear) in the world of datacenters.

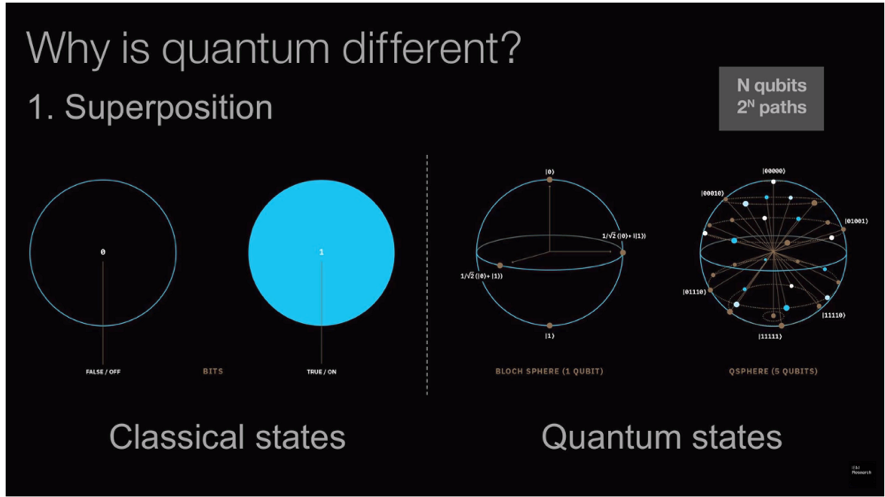

The essential difference between a regular computer and a quantum computer lies in their working principles: while regular computers use bits that are either 1 or 0 (binary), quantum computers use “qubits”, which can be in a superposition of both 1 and 0 at the same time. This superposition means that measuring a qubit won’t always return the same value (as it isn’t 100% a 1 or a 0); in fact, you can measure two identically prepared qubits and get different results.
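The measurement behaviour described above can be imitated on a regular computer. The following is only a minimal sketch, not a real quantum program: it assumes a single qubit written as `alpha|0> + beta|1>` and uses the standard rule that a measurement returns 1 with probability `|beta|^2` (after normalisation). The function name `measure` and the chosen amplitudes are illustrative assumptions.

```python
import math
import random


def measure(alpha: complex, beta: complex, rng: random.Random) -> int:
    """Simulate one measurement of the state alpha|0> + beta|1>.

    Returns 1 with probability |beta|^2 / (|alpha|^2 + |beta|^2), else 0.
    """
    norm = abs(alpha) ** 2 + abs(beta) ** 2
    p_one = abs(beta) ** 2 / norm
    return 1 if rng.random() < p_one else 0


# Hypothetical example: an equal superposition of 0 and 1.
rng = random.Random(0)  # fixed seed so the run is repeatable
amp = 1 / math.sqrt(2)

# Each entry stands for a fresh, identically prepared qubit being measured.
results = [measure(amp, amp, rng) for _ in range(1000)]

print("first ten measurements:", results[:10])
print("fraction of ones:", sum(results) / len(results))
```

Every simulated qubit is prepared in exactly the same state, yet the individual results differ; only the long-run fraction of ones settles near one half.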

This contrast makes it difficult to get regular computers and quantum computers to work together since, by default, they do not understand each other. Moreover, as quantum computing is still a new and not yet well-understood area, it has some important drawbacks:

- In some cases, the output may be read incorrectly: Google reported a readout error of about 1% for the 9-qubit device on which its Bristlecone quantum processor is based.

- It needs specialized equipment to function correctly (usually extremely low temperatures).

- It is far more expensive than a regular computer.

- Regular programs cannot run on these computers: new ones have to be written specifically for them (at the moment, very few such programs exist).
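To give a feel for what a readout error of about 1% per qubit means in practice, the sketch below estimates how often a whole register of qubits is read without a single mistake. It assumes, purely for illustration, that every qubit is misread independently with the same probability; real devices may not behave this way, and the function name `p_all_correct` and the register sizes are hypothetical.

```python
def p_all_correct(n_qubits: int, readout_error: float = 0.01) -> float:
    """Probability that all n_qubits are read correctly.

    Assumes each qubit is misread independently with probability
    readout_error (an illustrative simplification).
    """
    return (1.0 - readout_error) ** n_qubits


# Illustrative register sizes, not taken from any specific machine.
for n in (1, 10, 50, 100):
    print(f"{n:>3} qubits: {p_all_correct(n):.3f}")
```

Under this assumption, a small per-qubit error compounds quickly: the chance of a fully correct readout drops well below one half by the time the register reaches a hundred qubits, which is why results are usually obtained from many repeated runs.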

This technology is difficult to discuss in the context of datacenters because it has not yet reached the point of being deployed commercially. As such, it does not yet create value the way GPUs do, even though the role it aims to play is similar (supporting regular computing).

It is also important to state that, currently, quantum computing is not a miracle technology. The different principles on which a quantum computer works allow it to perform extremely well in some cases, but it fails to outperform traditional computers in many others, partly because it is not yet fully understood or reliable in some areas (as noted above) and partly because some tasks simply do not benefit from its characteristics (it depends on the algorithm). What is more, current quantum computers, with fewer than a hundred qubits, are the equivalent of the first processors ever made in regular computing.

That being said, these are some areas in which this type of computing excels:

- Optimization: It can crunch through a vast number of potential solutions quickly.

- Cryptography: Depending on the encryption algorithm, it can break the encryption extremely fast (mostly for asymmetric schemes).

- Big data: It scales very well with data (quantum computers can easily handle large amounts of it).

- Simulations: Quantum processes, the behaviour of matter at the molecular level, etc.

As with many new technologies, quantum computing is not positioning itself as a substitute for traditional computing (although, being a new field, that could still happen) but as an aid. It has now reached the point where quantum computers are for sale, albeit at a high price (the D-Wave 2000Q, for about 15 million US dollars), and some can even be used casually. As such, it is not far-fetched to imagine that, in the future, datacenters could use this type of computing to increase their performance.